What value do official statistics offer? The work of National Statistical Offices (NSOs), which produce the headline figures on the economy, population and environment that we see in the news every day, is driven by the conviction that such work is uniquely valuable, playing an essential role in evidence-based decision-making, in political accountability and in democracy.

For years now, those who work in this field have been investing effort in clearly articulating, demonstrating, communicating and even quantifying this value for society, to prove their worth and justify their special role. Ask any official statistician “what’s so special about your figures?” and they will tell you that, because they are underpinned by the Fundamental Principles of Official Statistics, the datasets, numbers and analyses they produce are uniquely trustworthy, comparable, ethical and independent.

But a new report from UNECE’s Conference of European Statisticians, the United Nations’ highest statistical body in Europe and North America, argues that statisticians themselves should not be the ones to answer the question “what’s so special about official statistics?”

In what could be a paradigm shift for the way that NSOs develop and evaluate their outputs, the report, Measuring the Value of Official Statistics: testing and developing a measurement framework, written by a task force of experts from the region and across the globe, makes a simple but powerful assertion: any attempt to quantify how valuable official statistics are must begin by asking what is meant by value, and whose perceived value matters.

The report argues that it can be unhelpful, and even detrimental, to simply assume that official statistics are valuable because of the things official statisticians themselves consider important: accuracy, reliability, and consistent use of clearly defined concepts and internationally agreed methods. What if the people who actually use the statistics, or those who benefit from them indirectly, value something different? Some may want their data ultra-fast, and would even sacrifice some precision for the sake of speed. Others may value understandable graphs with clear explanations more than highly detailed data tables, while still others value the interoperability or machine readability that lets them download and process datasets easily.

This isn’t to deny the importance of the Fundamental Principles, the pillars guiding the entire profession. But as the authors of the report argue, guiding principles are not necessarily what policymakers and the general public are interested in when they choose and use statistics: “Like the foreign tourist who speaks louder and louder in their own language in the hope of being understood, we risk alienating our users by simply stating our values and hoping that people will ‘get it’. More helpful would be communicating how we fulfil their criteria of value.”

The group gathered case studies and detailed reports from many countries and looked at how statistical offices were defining and measuring value. They found that many of the indicators used internally to track the added value of statistical work were not really measuring value as such. The number of times a product is downloaded, commented on, cited in academic literature or ‘liked’ on social media might hint at its value, but such counts do not provide a clear and unambiguous measure of that value. The same is true of indicators of statistical quality, such as the extent to which statistics are free from errors, the speed with which errors are corrected, the amount of metadata available and the timeliness of statistical releases. These things do make official statistics valuable for some users, and they are the foundation of the high quality of official statistics, but they do not necessarily matter equally to everyone, for all kinds of statistics, all of the time.

After gathering and analyzing evidence from many countries, the authors of the UNECE report concluded that much of what NSOs were currently doing to assess the value of their work took a production-based perspective: quantifying value in terms of prices, revenues and ‘willingness to pay’. This view is easy to understand, corresponds to how we usually value market goods, and lets an NSO measure value from the standpoint of its own production of statistical products and services. The task force argued, however, that this perspective is not the best one for understanding the value of official statistics. Instead, any attempt to quantify how valuable official statistics are should take a consumer-based perspective, one that allows for subjective, emotional and dynamic perceptions.

Their report distils a set of key messages to guide NSOs as they embark on this shift towards a consumer-based perspective. Principal among these are:

- NSOs need to measure their own value both to prove and to improve: as a provider of a public good, an NSO needs both to show that it is using public resources well and offering a good return on investment, and to monitor the effectiveness of its efforts so that it can continually do better.
- To determine value, the NSO must seek people’s views: producers of official statistics should not assume they know what people value in statistical products and services without explicitly asking them, not just those who actively use official statistics but also those who don’t.
- Value is not the same thing as quality: quality is a well-defined technical concept in statistics, amounting essentially to ‘how good the statistics are’. Value, in contrast, can only be defined with reference to whoever is doing the valuing, since it is a subjective assessment of the qualities that make something desirable. In other words, value, like beauty, is in the eye of the beholder.

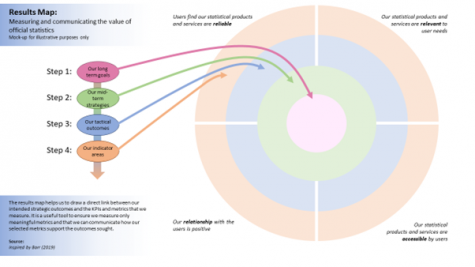

To offer a way forward, the report proposes two tools that may help NSOs reframe their thinking. The first is a model that encourages an expanded view of customer-perceived value, beyond the conventional dimensions of statistical quality. The second is a method called a ‘Results Map’, which can help an NSO define a clear path to achieving the central goals of official statistics, working outwards from core strategic goals to measurable outcomes and then to quantitative indicators of value.

The report, endorsed by the Conference of European Statisticians, is the work of an international task force of experts from national and international statistical organizations, chaired by the United Kingdom’s Office for National Statistics. The group continues to work to foster this shift in thinking in official statistics. In 2023 it aims to gather examples from pathfinder countries that trial the new approaches, and to communicate the key messages of the report in a condensed summary version.